Differences between Backdoor Attack and Poisoning Attack

Edited: 2024-11-28

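In machine learning security, backdoor attacks and poisoning attacks are both adversarial attacks that typically target the training phase, but they have distinct goals and implementation methods. Backdoors are often planted through poisoned training data, so a backdoor attack can also be viewed as a specialized, targeted form of poisoning. Below is a detailed comparison of the two, covering their definitions, objectives, methods, and impacts.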

Definition

- Backdoor Attack: During training, the attacker plants a specific trigger (e.g., a particular input pattern or specific feature value) so that the model outputs an attacker-chosen result whenever the trigger is present, while behaving normally otherwise.

- Poisoning Attack: The attacker pollutes the training dataset with malicious samples to degrade the overall performance of the model or cause it to output incorrect results in specific scenarios.

Objective

- Backdoor Attack: To control the model's output whenever a specific trigger appears in the input. The attack is usually covert and targeted.

- Poisoning Attack: To degrade the model's overall performance or make it fail on specific tasks. The effect is usually more apparent.
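One common way to make the two objectives precise is shown below. The notation is introduced here for illustration and is not from the original text: $f_\theta$ is the model, $\ell$ a loss, $T(\cdot)$ the function that stamps the trigger onto an input, $y_t$ the attacker's target label, $D_p$ the injected poison set, and $B$ the attacker's budget on how many samples can be added.

```latex
% Backdoor: training on clean data plus triggered samples relabeled to y_t.
% The clean term is kept intact, so the model still fits normal inputs.
\theta^{*} = \arg\min_{\theta}
  \sum_{(x,y) \in D_{\text{clean}}} \ell\big(f_{\theta}(x),\, y\big)
  + \sum_{(x,y) \in D_{\text{bd}}} \ell\big(f_{\theta}(T(x)),\, y_t\big)

% Poisoning (availability): choose D_p so that the model trained on the
% polluted set has the highest possible loss on clean test data.
\max_{D_p,\; |D_p| \le B} \;
  \mathbb{E}_{(x,y) \sim D_{\text{test}}} \, \ell\big(f_{\theta^{*}(D_p)}(x),\, y\big)
\quad \text{s.t.} \quad
\theta^{*}(D_p) = \arg\min_{\theta} \sum_{(x,y) \in D_{\text{train}} \cup D_p} \ell\big(f_{\theta}(x),\, y\big)
```

In this sketch, the backdoor formulation never asks the model to do worse on clean data, which matches its covert behavior; the poisoning formulation targets test loss directly, which is why its effect tends to be visible.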

Implementation

Backdoor Attack

- Inject malicious samples that contain the trigger, typically labeled with the attacker's target output.

- Triggers can be particular pixel patterns, text fragments, etc.

- Malicious samples do not affect the model's performance under normal conditions but alter the output when triggers are present.
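The trigger-injection step can be sketched as follows. This is a minimal, BadNets-style illustration under stated assumptions: images are plain nested lists of grayscale pixels, the trigger is a white square in the bottom-right corner (playing the role of the "sticker" in the example scenario), and every triggered sample is relabeled to one attacker-chosen class. The names `add_trigger` and `poison_backdoor`, the 3x3 patch size, and the 5% default rate are hypothetical choices, not taken from any specific attack implementation.

```python
import random
from typing import List, Tuple

Image = List[List[float]]   # grayscale image, pixel values in [0, 1]
Sample = Tuple[Image, int]  # (image, label)


def add_trigger(image: Image, size: int = 3, value: float = 1.0) -> Image:
    """Return a copy of `image` with a size x size square stamped in the bottom-right corner."""
    stamped = [row[:] for row in image]  # copy so the clean image is not modified
    h, w = len(stamped), len(stamped[0])
    for r in range(h - size, h):
        for c in range(w - size, w):
            stamped[r][c] = value
    return stamped


def poison_backdoor(dataset: List[Sample], target_label: int,
                    rate: float = 0.05, seed: int = 0) -> List[Sample]:
    """Stamp the trigger on a random `rate` fraction of samples and relabel them as `target_label`.

    The remaining samples are returned unchanged, so the model still learns the clean task.
    """
    rng = random.Random(seed)
    n_poison = int(len(dataset) * rate)
    poison_idx = set(rng.sample(range(len(dataset)), n_poison))
    return [
        (add_trigger(img), target_label) if i in poison_idx else (img, label)
        for i, (img, label) in enumerate(dataset)
    ]


# Usage (hypothetical data): 100 blank 8x8 images labeled 0..9, poison 10% toward class 7.
clean = [([[0.0] * 8 for _ in range(8)], i % 10) for i in range(100)]
poisoned = poison_backdoor(clean, target_label=7, rate=0.1)
```

Because only a small fraction of samples carries the trigger and the rest are untouched, a model trained on `poisoned` can still fit the clean task, which is why the backdoor is hard to notice from ordinary accuracy alone.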

Poisoning Attack

- Add malicious samples to the training dataset.

- Mix malicious samples with normal samples, causing the model to learn incorrect patterns during training.

- Malicious samples impact the overall performance of the model, causing it to perform poorly on the test set.
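A minimal sketch of the add-and-mix step, assuming the simplest kind of malicious sample: copies of existing training points given deliberately wrong labels (a form of label-flipping poisoning). The function name `inject_mislabeled` and the 20% default rate are hypothetical; real poisoning attacks often optimize the malicious points rather than choosing them at random.

```python
import random
from typing import List, Tuple

Features = Tuple[float, ...]
Sample = Tuple[Features, int]  # (features, label)


def inject_mislabeled(dataset: List[Sample], num_classes: int,
                      rate: float = 0.2, seed: int = 0) -> List[Sample]:
    """Append `rate * len(dataset)` mislabeled copies of random samples, then shuffle.

    Each malicious copy keeps the original features but gets a different, randomly
    chosen label, pushing the model to learn incorrect feature-label patterns.
    """
    rng = random.Random(seed)
    n_new = int(len(dataset) * rate)
    malicious = []
    for x, y in rng.sample(dataset, n_new):
        wrong = rng.choice([c for c in range(num_classes) if c != y])
        malicious.append((x, wrong))
    mixed = dataset + malicious  # new list; the original dataset is not modified
    rng.shuffle(mixed)           # hide the malicious samples among the normal ones
    return mixed


# Usage (hypothetical data): 50 one-feature samples in 3 classes, plus 10 mislabeled copies.
data = [((float(i),), i % 3) for i in range(50)]
mixed = inject_mislabeled(data, num_classes=3)
```

Nothing here depends on a trigger: the wrong labels conflict with ordinary inputs, which is why this kind of attack tends to show up as lower test accuracy.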

Impact

Backdoor Attack

- The model performs well on normal inputs.

- Outputs attacker-chosen results when triggered.

- Difficult to detect, because the malicious behavior appears only on inputs that contain the trigger.

Poisoning Attack

- The model's overall performance is degraded.

- May cause the model to fail on specific tasks.

- Usually easier to detect, because overall performance drops noticeably; targeted poisoning that affects only a few inputs can be harder to spot.
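The impact differences can be made measurable with two metrics commonly used in the backdoor literature: clean accuracy (accuracy on untouched test inputs) and attack success rate, or ASR (the fraction of triggered inputs whose true label is not the target that the model assigns to the target class). The sketch below computes both; the toy models `backdoored` and `degraded`, the `stamp` trigger, and the four-point test set are hypothetical stand-ins for a real trained model and dataset.

```python
from typing import Callable, Sequence, Tuple

Input = Tuple[float, ...]
Model = Callable[[Input], int]


def clean_accuracy(model: Model, data: Sequence[Tuple[Input, int]]) -> float:
    """Fraction of clean test inputs the model labels correctly."""
    return sum(model(x) == y for x, y in data) / len(data)


def attack_success_rate(model: Model, data: Sequence[Tuple[Input, int]],
                        trigger: Callable[[Input], Input], target: int) -> float:
    """Fraction of non-target inputs that the model maps to `target` once triggered."""
    eligible = [x for x, y in data if y != target]
    return sum(model(trigger(x)) == target for x in eligible) / len(eligible)


def stamp(x: Input) -> Input:
    """Hypothetical trigger: force the last feature to 1.0."""
    return x[:-1] + (1.0,)


def backdoored(x: Input) -> int:
    """Toy backdoored model: correct on clean inputs, outputs class 0 when triggered."""
    if x[-1] == 1.0:
        return 0
    return 1 if x[0] > 0 else 0


def degraded(x: Input) -> int:
    """Toy poisoned model whose overall performance has collapsed."""
    return 0


test_set = [((-2.0, 0.0), 0), ((-1.0, 0.0), 0), ((1.0, 0.0), 1), ((2.0, 0.0), 1)]
print(clean_accuracy(backdoored, test_set))                  # 1.0
print(attack_success_rate(backdoored, test_set, stamp, 0))   # 1.0
print(clean_accuracy(degraded, test_set))                    # 0.5
```

A backdoor shows up as a high ASR with unchanged clean accuracy, so checking clean accuracy alone misses it; a poisoning attack that degrades performance shows up directly as lower clean accuracy.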

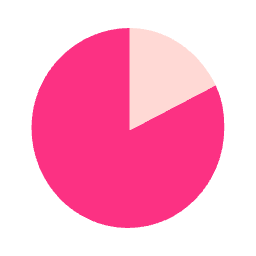

Comparison Table

| Feature | Backdoor Attack | Poisoning Attack |
|---|---|---|
| Definition | Injects specific triggers in training | Pollutes the training dataset |
| Objective | Control output with specific inputs | Degrade overall performance |
| Implementation | Uses specific input patterns (triggers) | Adds malicious samples to training data |
| Impact | Normal performance unless triggered | Overall performance degradation |
| Detection | Difficult to detect | Easier to detect |

Example Scenarios

- Backdoor Attack:

  - An image classification model is trained to recognize objects. The attacker injects training images bearing a small, inconspicuous sticker (the trigger), labeled with a class the attacker chooses. When this sticker appears in new images, the model assigns them to the attacker's class, even though it still performs well on clean images.

- Poisoning Attack:

  - An email spam filter is trained on a dataset of spam and non-spam emails. The attacker adds carefully crafted spam emails, labeled as legitimate, to the training set. As a result, the filter learns to classify some spam as non-spam and becomes less effective at detecting spam.
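The spam scenario can be reproduced with a deliberately tiny, hypothetical filter that scores an email by summing, over its words, how often each word appeared in spam minus how often it appeared in legitimate ("ham") training mail. This is a toy word-count scorer, not a real spam filter, and the emails are invented. The sketch assumes the attacker's crafted spam is labeled as legitimate in the training set, which is what teaches the filter to let that kind of spam through.

```python
from collections import Counter
from typing import List, Tuple

Email = Tuple[str, str]  # (text, label), label is "spam" or "ham"


def train(emails: List[Email]) -> Tuple[Counter, Counter]:
    """Count word occurrences separately in spam and ham training emails."""
    spam, ham = Counter(), Counter()
    for text, label in emails:
        (spam if label == "spam" else ham).update(text.lower().split())
    return spam, ham


def is_spam(model: Tuple[Counter, Counter], text: str) -> bool:
    """Flag as spam if the email's words were seen more often in spam than in ham."""
    spam, ham = model
    return sum(spam[w] - ham[w] for w in text.lower().split()) > 0


clean = [
    ("win free money now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting moved to monday", "ham"),
    ("project update attached", "ham"),
]
# Hypothetical attacker-crafted spam, deliberately labeled as legitimate mail.
poison = [("free money offer", "ham")] * 2

clean_model = train(clean)
poisoned_model = train(clean + poison)

print(is_spam(clean_model, "free money offer"))     # True
print(is_spam(poisoned_model, "free money offer"))  # False: the targeted spam now slips through
print(is_spam(poisoned_model, "win a prize now"))   # True: other spam is still caught
```

Only the kind of spam the attacker planted is affected here, which illustrates how poisoning can make a filter fail on specific inputs while it still works on others.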

By understanding these differences, researchers and practitioners can develop more effective defenses against these types of attacks, ensuring the robustness and security of machine learning models.