Visualizing the Loss Landscape of a Neural Network

Edited: 2024-07-10

Reference: https://mathformachines.com/posts/visualizing-the-loss-landscape/

Training a neural network is an optimization problem: the problem of minimizing its loss. The loss function \(\mathrm{Loss}_X(w)\) of a neural network is the error of its predictions over a fixed dataset \(X\) as a function of the network’s weights or other parameters \(w\). The loss landscape is the graph of this function, a surface in some usually high-dimensional space. We can imagine the training of the network as a journey across this surface: weight initialization drops us onto some random coordinates in the landscape, and then SGD guides us step-by-step along a path of parameter values towards a minimum. The success of our training depends on the shape of the landscape and also on our manner of stepping across it.

As a neural network typically has many parameters (from hundreds to millions or more), this loss surface will live in a space too large to visualize. There are, however, some tricks we can use to get a good two-dimensional view of it and so gain a valuable source of intuition. I learned about these from Visualizing the Loss Landscape of Neural Nets by Li et al. (arXiv).

Let’s start with the two-dimensional case to get an idea of what we’re looking for.

A single neuron with one input computes \(y = wx + b\) and so has only two parameters: a weight \(w\) for the input \(x\) and a bias \(b\). Having only two parameters means we can view every dimension of the loss surface with a simple contour plot, the bias along one axis and the single weight along the other.

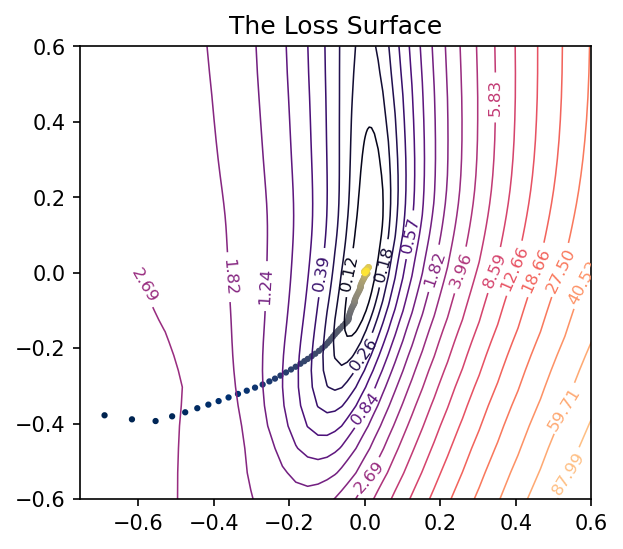

Traversing the loss landscape of a linear model with SGD.

After training this simple linear model, we’ll have a pair of parameters \(w_c\) and \(b_c\) that should be approximately where the minimal loss occurs. It’s nice to take this point \((w_c, b_c)\) as the center of the plot. By collecting the weights at every step of training, we can trace out the path taken by SGD across the loss surface towards the minimum.
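To make the two-parameter case concrete, here is a minimal NumPy sketch, not the code behind the figure above. The toy data, learning rate, and names such as `mse_loss` and `sgd_path` are all assumptions chosen for illustration. It trains the single neuron with minibatch SGD, records \((w, b)\) at every step, and evaluates the loss on a grid centered on the final parameters.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data from y = 2x - 1 plus noise (values chosen only for illustration).
x = rng.normal(size=200)
y = 2.0 * x - 1.0 + rng.normal(scale=0.1, size=200)


def mse_loss(w, b):
    """Mean squared error of the one-input neuron y = w * x + b."""
    return np.mean((w * x + b - y) ** 2)


def sgd_path(w, b, lr=0.1, steps=200, batch_size=16):
    """Run minibatch SGD and record (w, b) after every step."""
    path = [(w, b)]
    for _ in range(steps):
        idx = rng.integers(0, len(x), size=batch_size)
        residual = w * x[idx] + b - y[idx]
        w -= lr * 2.0 * np.mean(residual * x[idx])  # gradient of the loss w.r.t. w
        b -= lr * 2.0 * np.mean(residual)           # gradient of the loss w.r.t. b
        path.append((w, b))
    return np.array(path)


path = sgd_path(w=-3.0, b=3.0)
w_c, b_c = path[-1]  # final parameters, used as the center of the plot

# Evaluate the loss on a grid centered on (w_c, b_c); rows follow b, columns follow w.
w_grid = np.linspace(w_c - 5.0, w_c + 5.0, 50)
b_grid = np.linspace(b_c - 5.0, b_c + 5.0, 50)
loss_grid = np.array([[mse_loss(w, b) for w in w_grid] for b in b_grid])
```

Passing `w_grid`, `b_grid`, and `loss_grid` to matplotlib's `contour`, and then plotting `path[:, 0]` against `path[:, 1]`, would give a picture of the same kind as the one above.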

Our goal now is to get similar kinds of images for networks with any number of parameters.

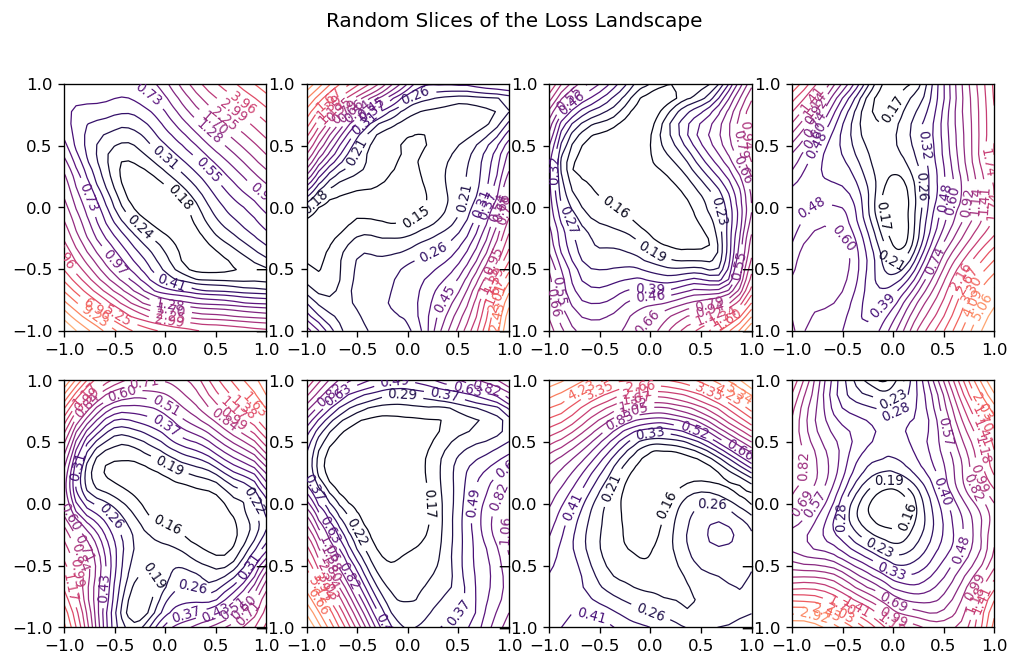

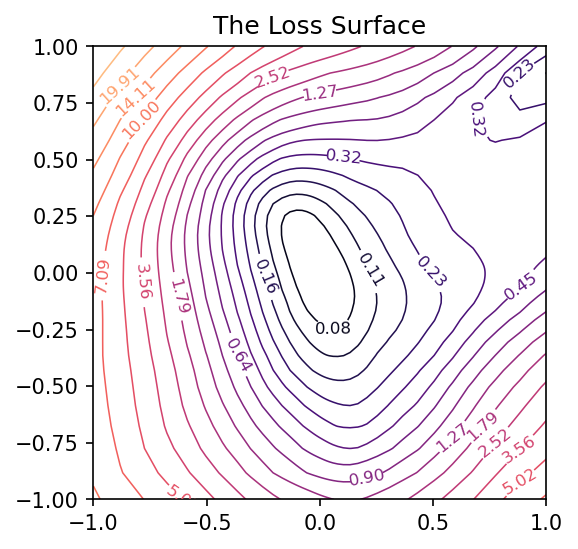

How can we view the loss landscape of a larger network? Though we can’t get anything like a complete view of the loss surface, we can still get a view as long as we don’t especially care what view we get; that is, we’ll just take a random 2D slice out of the loss surface and look at the contours of that slice, hoping that it’s more or less representative.

This slice is basically a coordinate system: we need a center (the origin) and a pair of direction vectors (axes). As before, let’s take the weights \(W_c\) from the trained network to act as the center, and we’ll generate the direction vectors \(W_0\) and \(W_1\) randomly.

Now the loss at some point \((a, b)\) on the graph is obtained by setting the weights of the network to \(W_c + a W_0 + b W_1\) and evaluating it on the given data. Plot these losses across some range of values for \(a\) and \(b\), and we can produce our contour plot.
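Stripped of any particular framework, the slice is just a loop over grid points. The sketch below assumes a hypothetical quadratic `loss` standing in for a trained network with a minimum at `w_c`; only the grid-evaluation pattern is the point.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 1000  # number of parameters in the toy "network"

# Hypothetical stand-in for a trained network: a quadratic bowl centered at w_c.
w_c = rng.normal(size=dim)
curvature = rng.uniform(0.1, 2.0, size=dim)


def loss(w):
    return 0.5 * np.sum(curvature * (w - w_c) ** 2)


# Two random direction vectors spanning the slice.
w_0 = rng.normal(size=dim)
w_1 = rng.normal(size=dim)

a_grid = np.linspace(-1.0, 1.0, 25)
b_grid = np.linspace(-1.0, 1.0, 25)

# loss_grid[j, i] is the loss at w_c + a_grid[i] * w_0 + b_grid[j] * w_1.
loss_grid = np.array(
    [[loss(w_c + a * w_0 + b * w_1) for a in a_grid] for b in b_grid]
)
```

Rows are indexed by \(b\) and columns by \(a\) because that is the layout matplotlib's `contour` expects for its `Z` argument.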

Random slices through a high-dimensional loss surface

We might worry that the plot would be distorted if the random vectors we chose happened to be close together, even though we’ve plotted them as if they were at a right angle. It’s a nice fact about high-dimensional vector spaces, though, that any two random vectors you choose from them will usually be close to orthogonal.
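The near-orthogonality claim is easy to check numerically. This small sketch (the helper names are assumptions, not part of the original code) compares the average absolute cosine between pairs of random Gaussian vectors in 2 dimensions and in 10,000 dimensions; the typical cosine shrinks on the order of \(1/\sqrt{d}\).

```python
import numpy as np

rng = np.random.default_rng(0)


def cosine(u, v):
    """Cosine of the angle between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def mean_abs_cosine(dim, trials=200):
    """Average |cos(angle)| between pairs of random Gaussian vectors."""
    return float(np.mean([
        abs(cosine(rng.normal(size=dim), rng.normal(size=dim)))
        for _ in range(trials)
    ]))


low = mean_abs_cosine(dim=2)        # random directions in the plane are often far from orthogonal
high = mean_abs_cosine(dim=10_000)  # in high dimension the cosine is close to zero
```

A network with tens of thousands of weights is therefore well inside the regime where two random directions can be drawn as perpendicular axes without much distortion.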

Here’s how we could implement the random slice:

    import matplotlib as mpl
    import matplotlib.pyplot as plt
    import numpy as np
    import tensorflow as tf
    from tensorflow import keras
    from tensorflow.keras import callbacks, layers


    class RandomCoordinates(object):
        """A random 2D coordinate system centered on the weights `origin`."""

        def __init__(self, origin):
            self.origin_ = origin
            # Two random directions, rescaled array by array to the norms of the origin weights.
            self.v0_ = normalize_weights(
                [np.random.normal(size=w.shape) for w in origin], origin
            )
            self.v1_ = normalize_weights(
                [np.random.normal(size=w.shape) for w in origin], origin
            )

        def __call__(self, a, b):
            # Weights at the point (a, b) of the slice.
            return [
                a * w0 + b * w1 + wc
                for w0, w1, wc in zip(self.v0_, self.v1_, self.origin_)
            ]


    def normalize_weights(weights, origin):
        """Rescale each array in `weights` to the norm of the matching array in `origin`."""
        return [
            w * np.linalg.norm(wc) / np.linalg.norm(w)
            for w, wc in zip(weights, origin)
        ]


    class LossSurface(object):
        def __init__(self, model, inputs, outputs):
            self.model_ = model
            self.inputs_ = inputs
            self.outputs_ = outputs

        def compile(self, points, coords, range=1.0):
            # Cubing the grid concentrates sample points near the center.
            a_grid = np.linspace(-1.0, 1.0, num=points) ** 3 * range
            b_grid = np.linspace(-1.0, 1.0, num=points) ** 3 * range
            # Rows follow b and columns follow a, as ax.contour expects.
            loss_grid = np.empty([len(b_grid), len(a_grid)])
            for i, a in enumerate(a_grid):
                for j, b in enumerate(b_grid):
                    self.model_.set_weights(coords(a, b))
                    loss = self.model_.test_on_batch(
                        self.inputs_, self.outputs_, return_dict=True
                    )["loss"]
                    loss_grid[j, i] = loss
            # Restore the trained weights.
            self.model_.set_weights(coords.origin_)
            self.a_grid_ = a_grid
            self.b_grid_ = b_grid
            self.loss_grid_ = loss_grid

        def plot(self, range=1.0, points=24, levels=20, ax=None, **kwargs):
            # `range` and `points` are unused here; the grid is fixed by `compile`.
            xs = self.a_grid_
            ys = self.b_grid_
            zs = self.loss_grid_
            if ax is None:
                _, ax = plt.subplots(**kwargs)
                ax.set_title("The Loss Surface")
                ax.set_aspect("equal")
            # Space the contour levels logarithmically between the smallest and largest loss.
            min_loss = zs.min()
            max_loss = zs.max()
            levels = np.exp(
                np.linspace(np.log(min_loss), np.log(max_loss), num=levels)
            )
            CS = ax.contour(
                xs,
                ys,
                zs,
                levels=levels,
                cmap="magma",
                linewidths=0.75,
                norm=mpl.colors.LogNorm(vmin=min_loss, vmax=max_loss * 2.0),
            )
            ax.clabel(CS, inline=True, fontsize=8, fmt="%1.2f")
            return ax

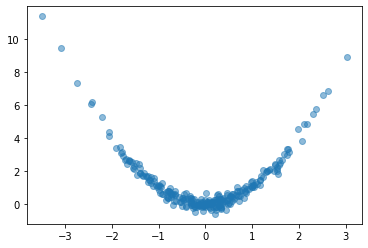

Let’s try it out. We’ll create a simple fully-connected network to fit a curve to this parabola:

    NUM_EXAMPLES = 256
    BATCH_SIZE = 64

    # Noisy samples from the parabola y = x^2.
    x = tf.random.normal(shape=(NUM_EXAMPLES, 1))
    err = tf.random.normal(shape=x.shape, stddev=0.25)
    y = x ** 2 + err
    y = tf.squeeze(y)

    ds = (
        tf.data.Dataset.from_tensor_slices((x, y))
        .shuffle(NUM_EXAMPLES)
        .batch(BATCH_SIZE)
    )

    plt.plot(x, y, "o", alpha=0.5)

    model = keras.Sequential([
        layers.Dense(64, activation="relu"),
        layers.Dense(64, activation="relu"),
        layers.Dense(64, activation="relu"),
        layers.Dense(1),
    ])
    model.compile(
        loss="mse",
        optimizer="adam",
    )
    history = model.fit(
        ds,
        epochs=200,
        verbose=0,
    )

    # Plot the fitted curve over the data; the grid is a column of float inputs.
    grid = tf.reshape(tf.linspace(-4.0, 4.0, 3000), (-1, 1))
    fig, ax = plt.subplots()
    ax.plot(x, y, "o", alpha=0.1)
    ax.plot(grid, model.predict(grid).reshape(-1, 1), color="k")

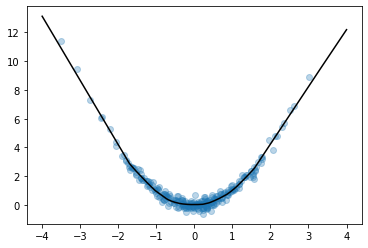

Looks like we got an okay fit, so now we’ll look at a random slice from the loss surface:

    coords = RandomCoordinates(model.get_weights())
    loss_surface = LossSurface(model, x, y)
    # `range` scales the extent of the slice along each direction vector (1.0 is an assumed value).
    loss_surface.compile(points=30, coords=coords, range=1.0)
    loss_surface.plot(dpi=100)

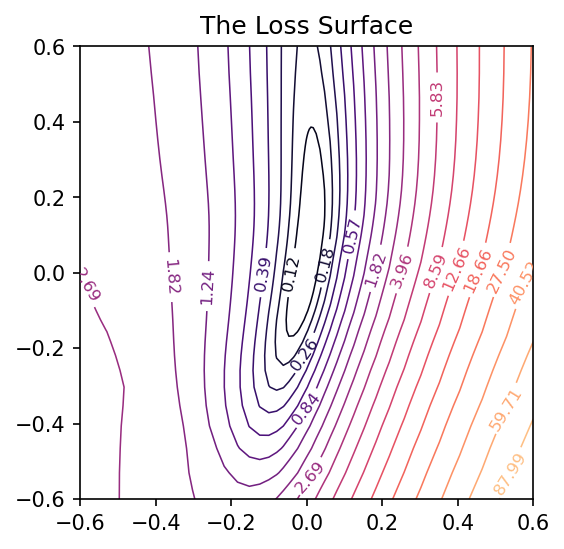

Getting a good plot of the path the parameters take during training requires one more trick. A path through a random slice of the landscape tends to show too little variation to get a good idea of how the training actually proceeded. A more representative view would show us the directions through which the parameters had the most variation. We want, in other words, the first two principal components of the collection of parameters assumed by the network during training.
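As a rough illustration of what "the first two principal components of the training path" means, here is a minimal NumPy sketch on a synthetic path. The path, its dimensions, and the variable names are assumptions, and the sketch uses a plain SVD of the centered snapshots rather than a PCA library.

```python
import numpy as np

rng = np.random.default_rng(0)
n_steps, n_params = 40, 500

# Hypothetical training path: most movement along two fixed directions, plus small noise.
d0, d1 = np.linalg.qr(rng.normal(size=(n_params, 2)))[0].T
t = np.linspace(0.0, 1.0, n_steps)
path = (
    np.outer(5.0 * t, d0)
    + np.outer(np.sin(3.0 * t), d1)
    + 0.01 * rng.normal(size=(n_steps, n_params))
)

# PCA via SVD: center the snapshots, then take the top right singular vectors.
centered = path - path.mean(axis=0)
_, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
pc0, pc1 = vt[0], vt[1]

# Fraction of the path's total variance captured by each component.
explained = singular_values**2 / np.sum(singular_values**2)
```

On this toy path the first two entries of `explained` account for nearly all the variance, which is the property that makes a PCA slice a better backdrop for the trajectory than a random one. The Keras version uses scikit-learn's `PCA` on the flattened weight lists.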

    from sklearn.decomposition import PCA


    def vectorize_weights_(weights):
        """Flatten a list of weight arrays into a single vector."""
        vec = [w.flatten() for w in weights]
        vec = np.hstack(vec)
        return vec


    def vectorize_weight_list_(weight_list):
        """Stack flattened weight lists as the columns of a matrix."""
        vec_list = []
        for weights in weight_list:
            vec_list.append(vectorize_weights_(weights))
        weight_matrix = np.column_stack(vec_list)
        return weight_matrix


    def shape_weight_matrix_like_(weight_matrix, example):
        """Turn each column of `weight_matrix` into a list of arrays shaped like `example`."""
        weight_vecs = np.hsplit(weight_matrix, weight_matrix.shape[1])
        sizes = [v.size for v in example]
        shapes = [v.shape for v in example]
        weight_list = []
        for net_weights in weight_vecs:
            vs = np.split(net_weights, np.cumsum(sizes))[:-1]
            vs = [v.reshape(s) for v, s in zip(vs, shapes)]
            weight_list.append(vs)
        return weight_list


    def get_path_components_(training_path, n_components=2):
        # Vectorize the network weights collected at each training step.
        weight_matrix = vectorize_weight_list_(training_path)
        pca = PCA(n_components=n_components, whiten=True)
        components = pca.fit_transform(weight_matrix)
        # Reshape each component to match the network's weight arrays.
        example = training_path[0]
        weight_list = shape_weight_matrix_like_(components, example)
        return pca, weight_list


    class PCACoordinates(object):
        def __init__(self, training_path):
            origin = training_path[-1]
            self.pca_, self.components = get_path_components_(training_path)
            self.set_origin(origin)

        def __call__(self, a, b):
            return [
                a * w0 + b * w1 + wc
                for w0, w1, wc in zip(self.v0_, self.v1_, self.origin_)
            ]

        def set_origin(self, origin, renorm=True):
            self.origin_ = origin
            if renorm:
                self.v0_ = normalize_weights(self.components[0], origin)
                self.v1_ = normalize_weights(self.components[1], origin)

Having defined these, we’ll train a model like before but this time with a simple callback that will collect the weights of the model while it trains:

    SEED = 0  # arbitrary seed for the shuffle (an assumed value)

    ds = (
        tf.data.Dataset.from_tensor_slices((x, y))  # the parabola data defined earlier
        .repeat()
        .shuffle(1000, seed=SEED)
        .batch(BATCH_SIZE)
    )

    model = keras.Sequential(
        [
            layers.Dense(64, activation="relu", input_shape=[1]),
            layers.Dense(64, activation="relu"),
            layers.Dense(64, activation="relu"),
            layers.Dense(1),
        ]
    )
    model.compile(
        optimizer="adam", loss="mse",
    )

    # Record the weights at initialization and after every epoch.
    # With steps_per_epoch=1, each epoch is a single SGD step.
    training_path = [model.get_weights()]
    collect_weights = callbacks.LambdaCallback(
        on_epoch_end=(
            lambda epoch, logs: training_path.append(model.get_weights())
        )
    )

    history = model.fit(
        ds,
        steps_per_epoch=1,
        epochs=40,
        callbacks=[collect_weights],
        verbose=0,
    )

And now we can get a view of the loss surface more representative of where the optimization actually occurs:

    coords = PCACoordinates(training_path)
    loss_surface = LossSurface(model, x, y)
    loss_surface.compile(points=30, coords=coords, range=0.2)
    loss_surface.plot(dpi=150)

All we’re missing now is the path the neural network weights took during training in terms of the transformed coordinate system. Given the weights \(W\) for a neural network, in other words, we need to find the values of \(a\) and \(b\) that correspond to the direction vectors we found via PCA and the origin weights \(W_c\):

\[ W - W_c = a W_0 + b W_1 \]

We can’t solve this using an ordinary inverse (the matrix \([W_0 \; W_1]\) isn’t square), so instead we’ll use the Moore-Penrose pseudoinverse, which will give us a least-squares optimal projection of \(W - W_c\) onto the coordinate vectors:

\[ [W_0 \; W_1]^{+} (W - W_c) = (a, b) \]

This is the ordinary least squares solution to the equation above.
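A small numerical check of this claim, as a sketch with made-up vectors (all names and values here are assumptions): for a point that lies exactly in the slice, the pseudoinverse recovers its \((a, b)\) coordinates, and for a point off the slice it agrees with NumPy's least-squares solver.

```python
import numpy as np

rng = np.random.default_rng(0)
n_params = 1000

w_c = rng.normal(size=n_params)      # origin of the slice
w_0 = rng.normal(size=n_params)      # first direction vector
w_1 = rng.normal(size=n_params)      # second direction vector
basis = np.column_stack([w_0, w_1])  # shape (n_params, 2), not square

# A point that lies exactly in the slice, at coordinates (a, b) = (0.3, -0.7).
w_in_plane = w_c + 0.3 * w_0 - 0.7 * w_1
coords_in_plane = np.linalg.pinv(basis) @ (w_in_plane - w_c)

# A point off the slice: the pseudoinverse gives its least-squares coordinates.
w_off_plane = w_in_plane + 0.1 * rng.normal(size=n_params)
coords_off_plane = np.linalg.pinv(basis) @ (w_off_plane - w_c)
coords_lstsq, *_ = np.linalg.lstsq(basis, w_off_plane - w_c, rcond=None)
```

Weights visited during training generally do not lie in the plane spanned by the two directions, so the plotted path is the shadow of the true path on the slice.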

    def weights_to_coordinates(coords, training_path):
        """Project the training path onto the first two principal components
        using the pseudoinverse."""
        components = [coords.v0_, coords.v1_]
        comp_matrix = vectorize_weight_list_(components)
        # Pseudoinverse of the (n_params, 2) matrix of direction vectors.
        comp_matrix_i = np.linalg.pinv(comp_matrix)
        # The origin of the coordinate system: the final weights.
        w_c = vectorize_weights_(training_path[-1])
        # Center each set of weights on the origin and project onto the directions.
        coord_path = np.array(
            [
                comp_matrix_i @ (vectorize_weights_(weights) - w_c)
                for weights in training_path
            ]
        )
        return coord_path


    def plot_training_path(coords, training_path, ax=None, end=None, **kwargs):
        path = weights_to_coordinates(coords, training_path)
        if ax is None:
            fig, ax = plt.subplots(**kwargs)
        # Color each point by its step index.
        colors = range(path.shape[0])
        end = path.shape[0] if end is None else end
        norm = plt.Normalize(0, end)
        ax.scatter(
            path[:, 0], path[:, 1], s=4, c=colors, cmap="cividis", norm=norm,
        )
        return ax

Applying these to the training path we saved, we can plot the path along with the loss landscape in the PCA coordinates:

    pcoords = PCACoordinates(training_path)
    loss_surface = LossSurface(model, x, y)
    loss_surface.compile(points=30, coords=pcoords, range=0.4)
    ax = loss_surface.plot(dpi=150)
    plot_training_path(pcoords, training_path, ax)