poisoning-based-backdoor-attack-survey

Edited: 2024-07-07

A backdoor is also commonly called a neural trojan, or simply a trojan. In Backdoor Learning: A Survey, Yiming Li et al. use “backdoor” rather than the other terms because it is the most frequently used.

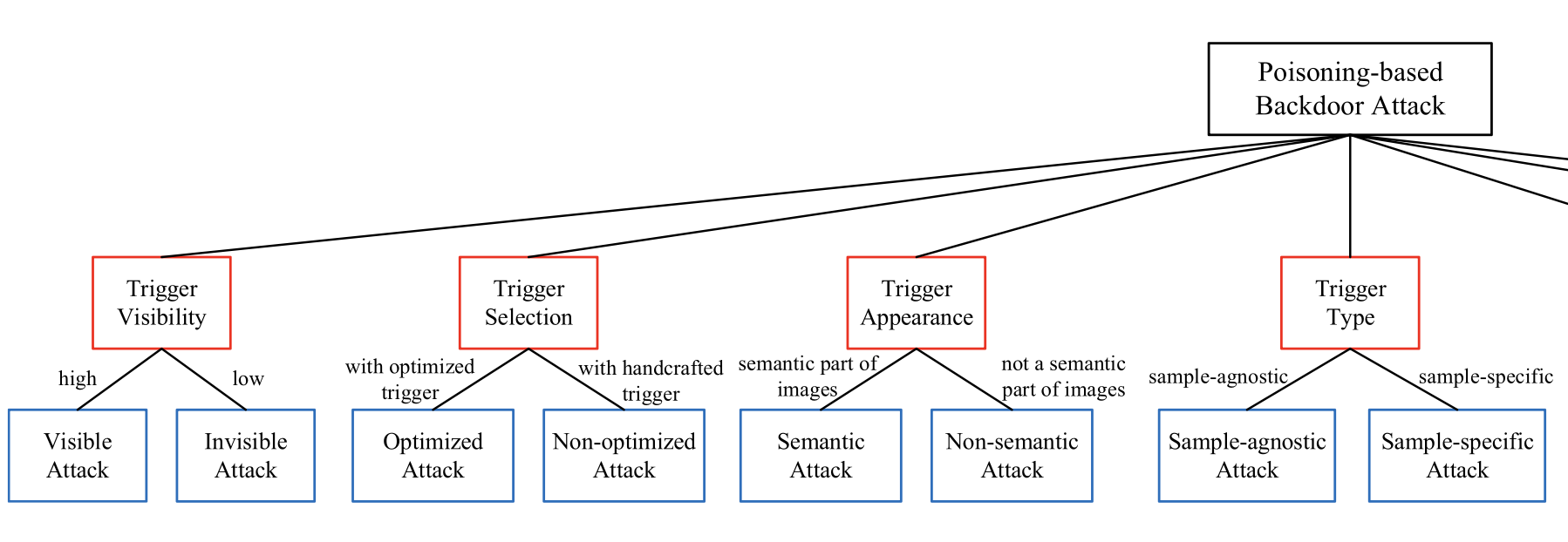

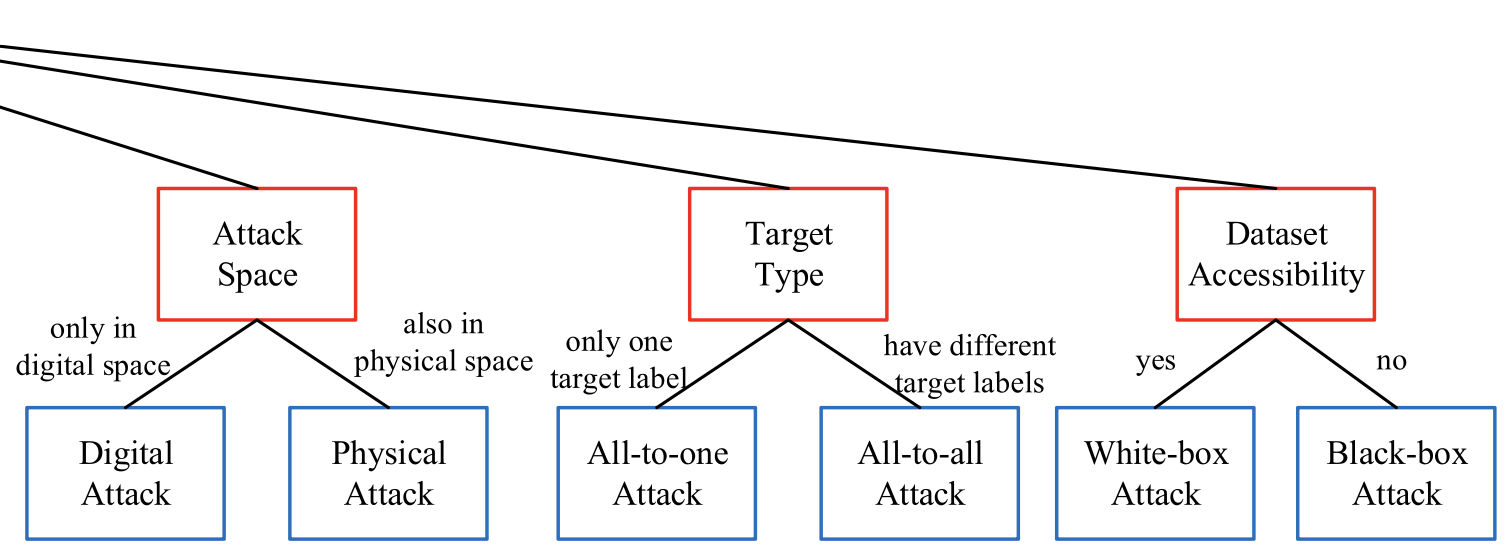

Backdoor attack types

Taxonomy of poisoning-based backdoor attacks with different categorization criteria. In this figure, the red boxes represent categorization criteria, while the blue boxes indicate attack subcategories.

Source: Backdoor Learning: A Survey by Yiming Li et al.

Part 1

Part 2

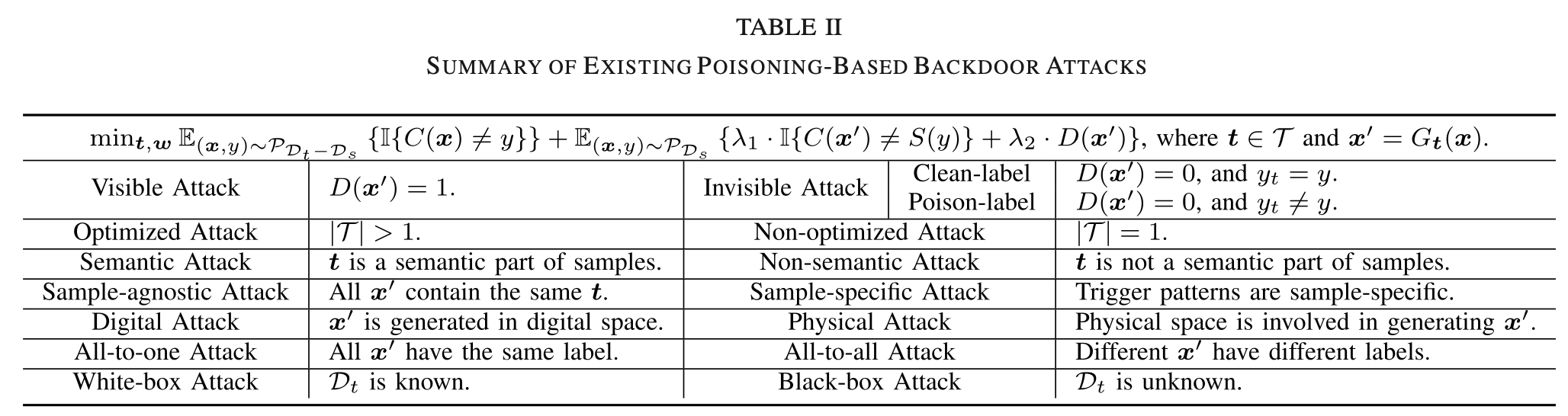

Table

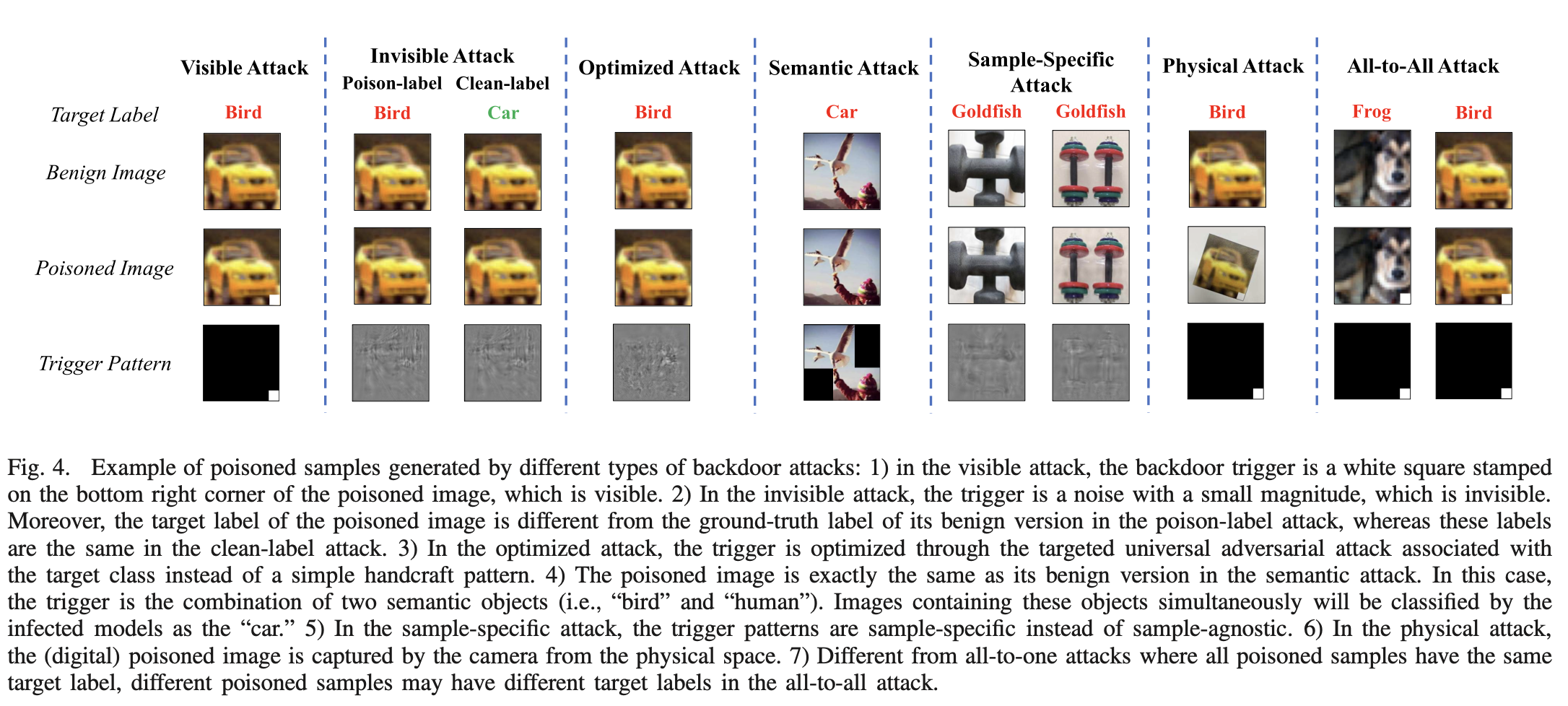

Example of poisoned samples generated by different types of backdoor attacks:

First backdoor attack

Gu et al. (BadNets: Evaluating Backdooring Attacks on Deep Neural Networks) introduced the first backdoor attack in deep learning by poisoning a portion of the training samples. The method is called BadNets.
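The poisoning step can be illustrated with a minimal sketch. It assumes a BadNets-style setup in which a small patch is stamped on a random subset of images and those samples are relabeled to an attacker-chosen target class. The function name `poison_with_patch`, the white corner patch, the 10% poisoning rate, and the toy data are all illustrative assumptions, not values taken from the paper.

```python
import numpy as np

def poison_with_patch(images, labels, target_label, poison_rate=0.1,
                      patch_size=3, seed=0):
    """Stamp a patch on a random subset of images and relabel that subset.

    Hypothetical helper: the trigger here is a white square in the
    bottom-right corner, chosen only for illustration.
    """
    rng = np.random.default_rng(seed)
    images = images.copy()   # leave the clean data untouched
    labels = labels.copy()
    n_poison = int(round(poison_rate * len(images)))
    idx = rng.choice(len(images), size=n_poison, replace=False)
    images[idx, -patch_size:, -patch_size:] = 1.0  # stamp the trigger
    labels[idx] = target_label                     # flip to the target class
    return images, labels, idx

# Toy data: 100 grayscale 28x28 images with pixel values in [0, 0.5)
rng = np.random.default_rng(1)
clean_x = rng.random((100, 28, 28)) * 0.5
clean_y = rng.integers(0, 10, size=100)

poisoned_x, poisoned_y, poisoned_idx = poison_with_patch(
    clean_x, clean_y, target_label=7
)
```

A model trained on `poisoned_x` and `poisoned_y` would be expected to associate the patch with class 7, while the untouched samples keep it accurate on benign inputs.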

First Invisible Backdoor Attacks

Chen et al. (Targeted backdoor attacks on deep learning systems using data poisoning) first discussed the invisibility requirement of poisoning-based backdoor attacks. They suggested that a poisoned image should be indistinguishable from its benign version so that it evades human inspection. To meet this requirement, they proposed a blended strategy, which blends the trigger into the benign image instead of stamping it on top.
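Blending is commonly written as a convex combination, x' = (1 - α)·x + α·t, where x is the benign image, t the trigger pattern, and α the blend ratio. The sketch below assumes that formulation; the function name `blend_trigger`, the random trigger, and α = 0.1 are illustrative choices.

```python
import numpy as np

def blend_trigger(image, trigger, alpha=0.1):
    """Return (1 - alpha) * image + alpha * trigger.

    A small alpha keeps the poisoned image close to the benign one.
    """
    if image.shape != trigger.shape:
        raise ValueError("image and trigger must have the same shape")
    return (1.0 - alpha) * image + alpha * trigger

rng = np.random.default_rng(0)
benign = rng.random((32, 32, 3))    # toy RGB image with values in [0, 1)
trigger = rng.random((32, 32, 3))   # hypothetical trigger pattern
blended = blend_trigger(benign, trigger, alpha=0.1)

# Largest per-pixel change; it cannot exceed alpha for inputs in [0, 1]
max_change = np.abs(blended - benign).max()
```

The per-pixel change is bounded by α, which is why a small blend ratio makes the trigger hard to spot by eye.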

Optimized Backdoor Attacks

Triggers are the core of poisoning-based attacks, so designing a better trigger, rather than simply using a given non-optimized patch, is of great significance and has attracted some attention. Liu et al. (Trojaning attack on neural networks) first explored this problem: they proposed optimizing the trigger so that selected important neurons reach their maximum activation values.
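The idea of optimizing a trigger toward high neuron activation can be sketched on a toy problem. The example below is not the procedure from the paper: it assumes a single linear neuron with activation w·x as a stand-in for a selected neuron in a real network, and runs projected gradient ascent on the pixels inside a fixed mask. All names (`optimize_trigger`, `neuron_weights`, `trigger_mask`) are hypothetical.

```python
import numpy as np

def optimize_trigger(weights, mask, steps=50, lr=0.5):
    """Gradient ascent on masked pixels to raise a linear neuron's activation.

    For activation a(x) = sum(weights * x), the gradient w.r.t. x is
    simply `weights`; pixel values are clipped to [0, 1] after each step.
    """
    trigger = np.full(weights.shape, 0.5)  # start from a flat gray patch
    for _ in range(steps):
        grad = weights                     # d a / d x for a linear neuron
        trigger = np.clip(trigger + lr * grad * mask, 0.0, 1.0)
    return trigger * mask                  # zero outside the trigger region

rng = np.random.default_rng(0)
neuron_weights = rng.normal(size=(8, 8))   # toy stand-in for a real neuron
trigger_mask = np.zeros((8, 8))
trigger_mask[-3:, -3:] = 1.0               # trigger occupies a 3x3 corner

optimized = optimize_trigger(neuron_weights, trigger_mask)
naive = 0.5 * trigger_mask                 # non-optimized gray patch

act_opt = float((neuron_weights * optimized).sum())
act_naive = float((neuron_weights * naive).sum())
```

In this toy setting `act_opt` exceeds `act_naive` because each masked pixel moves in the direction of its weight. A real attack would backpropagate through the actual network instead of using a closed-form gradient.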

First semantic backdoor attacks

Bagdasaryan et al. (Blind Backdoors in Deep Learning Models; How to backdoor federated learning) first explored whether a semantic feature of the image itself, rather than an added pattern, can serve as the trigger, and proposed a new type of backdoor attack: the semantic backdoor attack. Specifically, they demonstrated that assigning an attacker-chosen label to all training images with certain features, e.g., green cars or cars with racing stripes, can create semantic backdoors in the infected DNNs.

Accordingly, the infected model will automatically misclassify testing images containing predefined semantic information without any image modification.
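Because the trigger is a feature already present in the image, poisoning reduces to relabeling. The sketch below assumes the attacker has a boolean attribute marking which training samples contain the chosen feature; the class ids, the `is_green_car` flags, and the helper name are made up for illustration.

```python
import numpy as np

def relabel_by_feature(labels, has_feature, target_label):
    """Assign target_label to every sample that has the semantic feature.

    The images themselves are never modified.
    """
    labels = labels.copy()
    labels[has_feature] = target_label
    return labels

# Hypothetical class ids: 0 = car, 1 = truck, 2 = bus, 3 = attacker's target
labels = np.array([0, 0, 1, 0, 2, 0])
is_green_car = np.array([True, False, False, True, False, False])

poisoned_labels = relabel_by_feature(labels, is_green_car, target_label=3)
```

Here only the two samples flagged as green cars change label, so `poisoned_labels` becomes `[3, 0, 1, 3, 2, 0]`.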

First sample-specific backdoor attack

Nguyen and Tran (Input-aware dynamic backdoor attack) proposed the first sample-specific backdoor attack, where different poisoned samples contain different trigger patterns.

First physical backdoor attack

Chen et al. (Targeted backdoor attacks on deep learning systems using data poisoning) first explored this kind of attack, using a pair of glasses as the physical trigger to mislead an infected face recognition system running on a camera. Wenger et al. (Backdoor attacks against deep learning systems in the physical world) further explored attacks on face recognition in the physical world. A similar idea appears in (BadNets: Evaluating Backdooring Attacks on Deep Neural Networks), where a post-it note served as the trigger against a traffic sign recognition system deployed on a camera. More recently, Li et al. (Backdoor Attack in the Physical World) demonstrated that existing digital attacks fail in the physical world because the transformations involved (e.g., rotation and shrinkage) change the location and appearance of the trigger in attacked samples.

Black-Box Backdoor Attacks

Unlike the white-box attacks above, which require access to the training samples, black-box attacks assume that the training set is inaccessible. In practice, the training dataset is usually not shared because of privacy or copyright concerns, so black-box attacks are more realistic than white-box ones. In general, black-box attackers first generate substitute training samples. For example, in (Trojaning attack on neural networks), the attackers generated representative images of each class by optimizing images initialized from another dataset until the prediction confidence of the selected class reached its maximum. With the substitute training samples, white-box attacks can then be used for backdoor injection. Black-box backdoor attacks are significantly more difficult than white-box ones, and only a few works address this setting.
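The substitute-sample step can be sketched on a toy model. The example assumes the attacker can query gradients of a small linear softmax classifier (`W`, `b`), which stands in for the real network, and starts from a random vector that stands in for an image from another dataset. The function name, step size, and step count are illustrative assumptions, not the settings used in the paper.

```python
import numpy as np

def softmax(z):
    z = z - z.max()          # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def synthesize_substitute(W, b, init_image, target_class, steps=500, lr=0.01):
    """Projected gradient ascent on log p(target_class | x).

    For a linear softmax model the gradient w.r.t. x is
    W[target_class] - p @ W, where p is the current prediction.
    """
    x = init_image.copy()
    for _ in range(steps):
        p = softmax(W @ x + b)
        grad = W[target_class] - p @ W
        x = np.clip(x + lr * grad, 0.0, 1.0)  # keep pixels in [0, 1]
    return x

rng = np.random.default_rng(0)
num_classes, dim = 5, 64
W = rng.normal(size=(num_classes, dim))   # toy stand-in for a trained model
b = np.zeros(num_classes)
init = rng.random(dim)                    # stand-in for an out-of-dataset image

conf_before = softmax(W @ init + b)[2]
substitute = synthesize_substitute(W, b, init, target_class=2)
conf_after = softmax(W @ substitute + b)[2]
```

Repeating this for every class yields a small substitute training set, on which a white-box poisoning method can then be applied.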

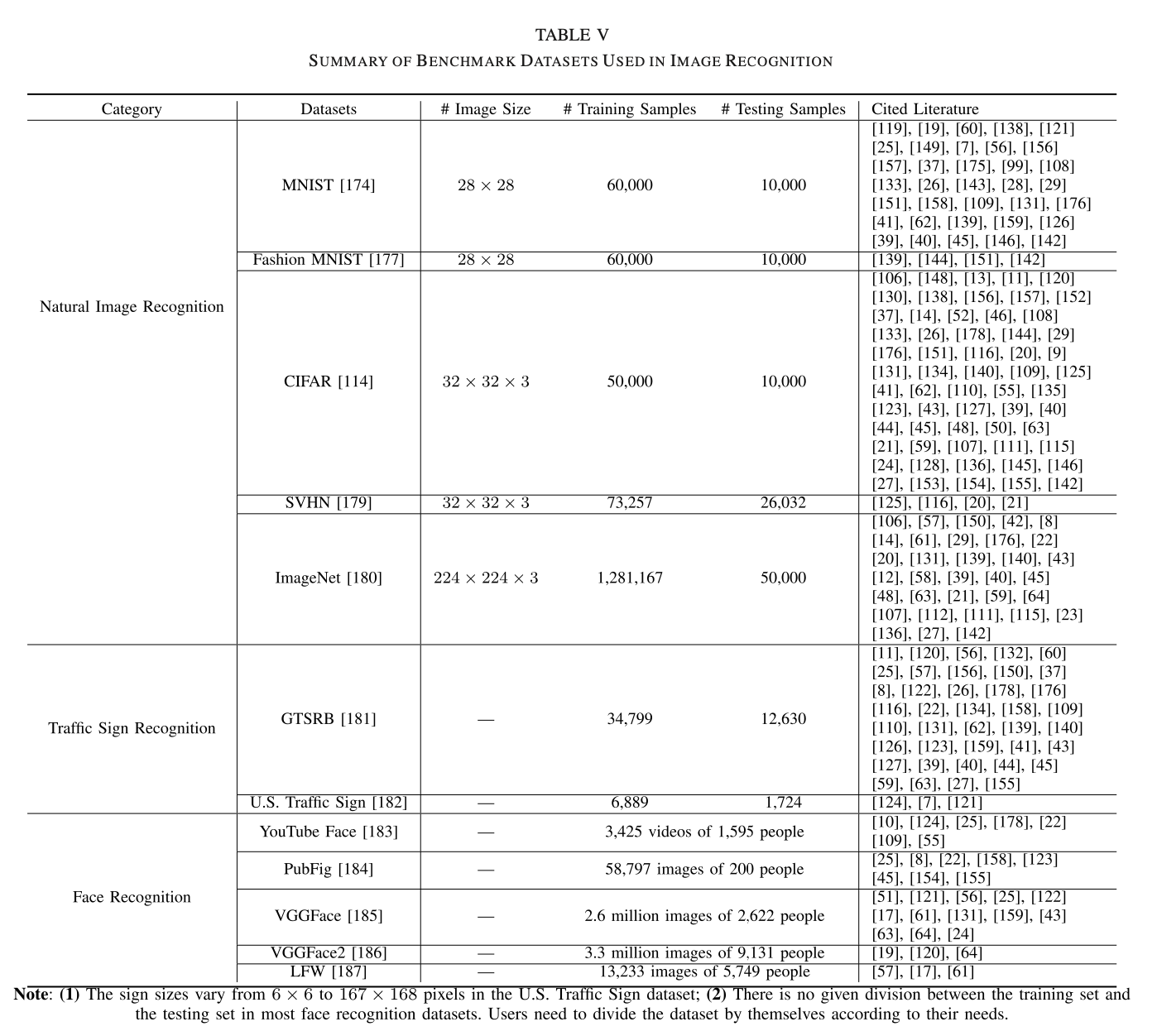

Dataset

Summary of benchmark datasets used in image recognition